Hello World,

If you want to read Part I, click here.

Today, we will continue our discussion about the PROXMOX VE product. In my previous posts, I described how to deploy PROXMOX VE and how to create virtual machines (KVM or OpenVZ).

Storage Model

In the beginning, PROXMOX VE used only local disks as storage. This was acceptable in scenarios where you wanted to minimize the cost of the storage infrastructure, and live migration was an option even if you were using local disks. Starting with version 1.4, the PROXMOX VE team modified the storage model: you can now use iSCSI, NFS shares, shared directories and LVM groups.

Let’s quickly go through them!

Using Local disks

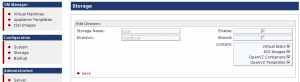

As in previous versions, you can create your virtual machines and store them on the local disks. No specific configuration is required on your system. After a default installation of PROXMOX VE, you can check the storage configuration: go to the Configuration section and select the Storage link. A web page listing your local storage should be displayed (see screenshot below).

If you click the arrow next to the local disk and select Edit, you will see the following screen.

In the Edit Directory page, you can see the directory and the types of object that you can store on it. On the default local storage, you can store virtual disks, ISO images, OpenVZ containers and OpenVZ templates.

By default, you cannot store your backups on this local storage. If you go to the Backup section and try to perform a backup, you will get an error message stating that no storage has been defined. I haven’t tested it yet, but I think that if you have a system with multiple disks and you create a “Directory” or LVM group storage type, you will be able to store your backups locally.
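For the Directory option, the preparation on the console could look like the sketch below. This is only a sketch of the untested idea above, assuming a second local disk that is already partitioned, formatted and mounted at /mnt/disk2; that path and the function name prepare_backup_dir are hypothetical.

```shell
#!/bin/sh
# Sketch only: prepare a directory on a second local disk to hold backups.
# ASSUMPTION: the disk is already formatted and mounted at /mnt/disk2
# (hypothetical path); pass your own path as the first argument.
prepare_backup_dir() {
  dir="${1:-/mnt/disk2/pvebackup}"
  # Create the directory (and any missing parent directories)
  mkdir -p "$dir" || return 1
  # Show the free space available for backups on that file system
  df -h "$dir"
}
```

After running `prepare_backup_dir` as root, you would add a Directory storage in the web interface that points at this path, with backup files selected as content.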

Using Directory (on Windows or SAMBA Server)

This can be convenient when your infrastructure is mainly based on Windows servers and you do not have any iSCSI infrastructure. It is also good practice to store some of your files (backups, for example) on a machine other than the PROXMOX VE host.

In order to use a Windows directory with PROXMOX VE, you first need to create a shared folder. Let’s say we want to store our backups in the following location: `\\FileServer\pvebackup`. Do not forget to grant the correct read/write permissions to the appropriate user accounts.

On your Proxmox VE machine, you need to create a mount point, that is, an empty folder on which the content of the Windows shared folder will be mounted. At the moment, this operation cannot be performed through the GUI; you have to use the console and type something similar to this:

```shell
mkdir /mnt/pvebackup
```

Here, we have simply created a folder on the PVE host. This folder will be used to mount the content of the Windows directory we want to use for backup files.

Next, edit the /etc/fstab file so that the shared folder is mounted on your PVE host at boot time. From the command line, type:

```shell
nano /etc/fstab
```

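The file opens in the editor. Add the following line at the end of the file:

```
//<servername>/<sharename> /mnt/pvebackup cifs username=<username>,password=<password>,domain=<domainname> 0 0
```

This is a generic entry: replace the values in angle brackets with your own. Note that the mount options (the fourth field) must be a single comma-separated list without spaces, because the fields of /etc/fstab are separated by whitespace.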
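The entry above stores the Windows password in clear text in /etc/fstab, which is normally readable by every user on the host. mount.cifs also accepts a `credentials=<file>` option pointing to a file that contains `username=`, `password=` and `domain=` lines. Below is a minimal sketch that writes such a file with owner-only permissions; the function name write_cifs_credentials and the path /root/.smbcredentials are hypothetical.

```shell
#!/bin/sh
# Sketch: write a CIFS credentials file readable by its owner only, so the
# password does not have to appear in /etc/fstab.
# Usage: write_cifs_credentials <file> <username> <password> <domain>
write_cifs_credentials() {
  file="$1"; user="$2"; pass="$3"; domain="$4"
  if [ -z "$file" ] || [ -z "$user" ]; then
    echo "usage: write_cifs_credentials <file> <username> <password> <domain>" >&2
    return 2
  fi
  # Create the file under a restrictive umask (inside a subshell, so the
  # caller's umask is left unchanged)
  (
    umask 077
    printf 'username=%s\npassword=%s\ndomain=%s\n' "$user" "$pass" "$domain" > "$file"
  ) || return 1
  # Also tighten the permissions in case the file already existed
  chmod 600 "$file"
}
```

With the file written to /root/.smbcredentials, the fstab entry would become `//<servername>/<sharename> /mnt/pvebackup cifs credentials=/root/.smbcredentials 0 0`.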

Finally, connect to your share with the following command (mount looks up the remaining settings in /etc/fstab):

```shell
mount //<servername>/<sharename>
```
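If the share fails to mount (wrong password, server offline), /mnt/pvebackup is just an empty local folder, and backups could silently end up on the local disk. A minimal sketch of a check you might run before relying on the storage; it reads /proc/mounts, so it assumes a Linux host, and the function names are hypothetical.

```shell
#!/bin/sh
# Sketch: make sure a mount point from /etc/fstab is really mounted.

# Return 0 if the given path is a mount point listed in /proc/mounts.
# (Paths containing spaces are escaped in /proc/mounts and not handled here.)
is_mounted() {
  awk -v mp="$1" '$2 == mp { found = 1 } END { exit !found }' /proc/mounts
}

# Mount the path using its /etc/fstab entry if needed, then re-check.
ensure_mounted() {
  mp="${1:-/mnt/pvebackup}"
  if is_mounted "$mp"; then
    echo "$mp is mounted"
    return 0
  fi
  mount "$mp" || return 1
  is_mounted "$mp"
}
```

Running `ensure_mounted` as root with no argument checks /mnt/pvebackup and tries to mount it if it is not mounted yet.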

A real world example

On my Windows box, I’ve created a directory called PVEDIR and created three subfolders inside it (for demo purposes):

- pveiso (used to store ISO files)

- pveimages (used to store virtual disks)

- pvebkf (used to store backups of your virtual machines)

I share the PVEDIR folder and grant read/write access to the appropriate accounts.

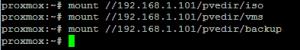

On my PVE host, I created the mount points from the console.

Then I updated my /etc/fstab file. It looks like the following screenshot.

Then I issued the mount command for all three shared folders I want to connect to.
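The screenshot of these steps is not reproduced here. Below is a minimal sketch of equivalent commands. It assumes the PVEDIR share and the three subfolders described above, mount points named after the subfolders under /mnt, and that your version of mount.cifs accepts a subfolder after the share name in the UNC path (worth checking on your system). The server, account values and function names are hypothetical.

```shell
#!/bin/sh
# Sketch: mount points and /etc/fstab entries for the three PVEDIR subfolders.
SUBFOLDERS="pveiso pveimages pvebkf"

# Create one mount point per subfolder under the given base directory.
create_mountpoints() {
  base="${1:-/mnt}"
  for sub in $SUBFOLDERS; do
    mkdir -p "$base/$sub" || return 1
  done
}

# Print one fstab line per subfolder (mount points under /mnt).
# Usage: pvedir_fstab_lines <server> <share> <username> <password> <domain>
pvedir_fstab_lines() {
  server="$1"; share="$2"; user="$3"; pass="$4"; domain="$5"
  for sub in $SUBFOLDERS; do
    printf '//%s/%s/%s /mnt/%s cifs username=%s,password=%s,domain=%s 0 0\n' \
      "$server" "$share" "$sub" "$sub" "$user" "$pass" "$domain"
  done
}
```

As root on the PVE host, you would run `create_mountpoints`, then append the entries with `pvedir_fstab_lines FileServer PVEDIR <username> <password> <domainname> >> /etc/fstab`, and finally run `mount -a` to mount every entry listed in /etc/fstab.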

Your infrastructure is ready. You can now populate the shared directory with the appropriate content.

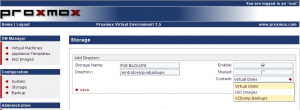

So far, we have prepared the PVE host to connect to the Windows directory. Now it’s time to tell Proxmox VE that this storage is available and ready to be used. Go back to the web interface, select the Configuration section and click Storage.

On the Storage page, click the arrow next to the storage list title and select “Add Directory”.

You will be redirected to the following screen. Simply provide the requested information, and note that you have to specify the content type. Here, you can store virtual disks, ISO images and backup files.

Click Save.

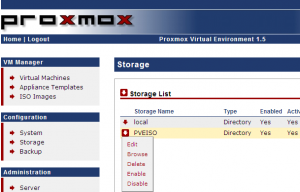

You will come back to the Storage page and you should see the new storage listed here.

If you click the arrow next to the new storage you’ve created, you will see that you can manage it through a limited set of commands: Edit, Browse, Delete, Enable and Disable.

To populate your directory, you have multiple options: you can use the web interface or simply copy files (from another Windows machine) to this shared directory.

If you use the web interface and you have set the content type to ISO images, when you browse to the ISO Images page (in the VM Manager section), you will notice that the additional storage is listed in the ISO Storage dropdown list box.

If the content type was set to virtual disks, you would find the additional storage option on the Create Virtual Machine page. Here again, in the Disk Storage drop-down list, you can choose whether to store your virtual machine locally or on the directory you’ve just made available.

This is a cool feature that allows companies with tight budgets to simply reuse their existing infrastructure.

Note: this also works with a Linux SAMBA server.

Using NFS Server

PROXMOX VE can also work with an NFS server. I won’t explain (in this post) how to set up an NFS server, but if your company is using NFS technology, it is easy to configure PROXMOX VE to use NFS as a storage location.

Here, you do not need to use the command line. Simply open your web interface, go to the Configuration section and click Storage. Click the arrow next to the storage list and select Add NFS Share.

You will be redirected to the following screen. Simply enter the requested information and do not forget to specify the content type. With NFS shares, you can also perform live migration.
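Before adding the share in the web interface, you might check from the PVE host’s console that the NFS server actually exports something to it. A minimal sketch using showmount; it assumes the NFS client tools (the nfs-common package on Debian-based systems) are installed on the host, and the function name is hypothetical.

```shell
#!/bin/sh
# Sketch: list the exports an NFS server offers, as seen from the PVE host.
# Usage: check_nfs_exports <nfs-server>
check_nfs_exports() {
  server="$1"
  if [ -z "$server" ]; then
    echo "usage: check_nfs_exports <nfs-server>" >&2
    return 2
  fi
  # showmount -e asks the server's mount daemon for its export list
  showmount -e "$server"
}
```

If the export you plan to use does not appear in the list, the problem is most likely on the NFS server side (export list or allowed clients), not in PROXMOX VE.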

Using iSCSI Infrastructure

Again, we will not show you how to configure your iSCSI infrastructure. You can use the open source OpenFiler or FreeNAS software to test or build an iSCSI infrastructure for your company.

We assume that your iSCSI targets are ready to be used. We will simply demonstrate how to use an iSCSI infrastructure as a storage alternative for your PROXMOX VE infrastructure. Note, however, that iSCSI storage can contain only virtual disks (no backups or ISO files).

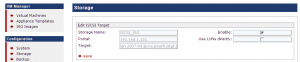

As with the NFS share, we can perform the whole operation directly from the web interface. Go to the Configuration section and click Storage. Click the arrow next to the storage list and select Add iSCSI Target.

You will be redirected to the following screen. Enter the name you want to use and the portal name, then click Scan to select which iSCSI target you want to use. When done, click Save.
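If Scan returns nothing, you can try the same kind of discovery from the console. This sketch assumes the open-iscsi tools (iscsiadm) are installed on the host. A sendtargets discovery prints one line per target, in the form `<portal>,<tag> <target IQN>`. The function names and the sample portal address are hypothetical.

```shell
#!/bin/sh
# Sketch: discover the iSCSI targets offered by a portal from the PVE console.
# Usage: discover_iscsi_targets <portal-ip[:port]>
discover_iscsi_targets() {
  [ -n "$1" ] || { echo "usage: discover_iscsi_targets <portal>" >&2; return 2; }
  iscsiadm -m discovery -t sendtargets -p "$1"
}

# Keep only the target IQNs from discovery output read on stdin
# (each line looks like "192.168.1.10:3260,1 iqn.2006-01.com.example:disk1").
list_target_iqns() {
  awk 'NF >= 2 { print $2 }'
}
```

For example, `discover_iscsi_targets 192.168.1.10 | list_target_iqns` would print the IQNs you should also see in the Scan result.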

With this storage, you also have access to the live migration feature of PROXMOX VE.

Using LVM Group

I’m really not an expert on this technology, but from what I have read on the PROXMOX VE wiki, it sounds interesting.

“Using a LVM group provides the best manageability. If the base storage for the LVM group is accessible on all Proxmox VE nodes (e.g. an iSCSI LUN), the LVM group can be shared and live-migration is possible.” (extract from the Proxmox VE wiki)

LVM provides some flexibility in terms of disk management. Using LVM, you can add or replace disks, and copy or move content from one disk to another without service disruption. You can also repartition your disks on the fly.

I haven’t tested all the features of LVM group storage; I’ve followed the instructions available on the Proxmox VE wiki. To create an LVM group on shared storage, you first need to create an iSCSI target to be used as the base for your LVM group.

Let’s start by checking that an iSCSI target exists, or make one available. If you need to add an iSCSI target, follow the previous section and ensure that the option “Use LUNs directly” is disabled. You should have a screen similar to this one.

Now that we have an iSCSI LUN available, we can create our LVM group. On the Storage page, click the storage list arrow and select “Add LVM group”.

On the Add LVM Group page, you need to specify the base storage: this is the iSCSI target you’ve previously created. You have to give a unique name in the Volume Group Name field; this name cannot be changed later. Finally, ensure that the Shared option is enabled.

When you are happy with your setup, click Save. You will be redirected to the Storage page, where your newly created LVM2 group is listed.

You can also create LVM groups from local devices (additional hard disks or USB disks). If you want to use a local disk, you first need to go to the console and create the physical volume and the volume group.

Briefly, first create the physical volume (replace /dev/sdb1 with the device that corresponds to your disk on the PROXMOX VE host):

```shell
pvcreate /dev/sdb1
```

Then create the volume group:

```shell
vgcreate local1 /dev/sdb1
```
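Because pvcreate writes LVM metadata to the device, it may be worth wrapping the two commands with a few sanity checks. Below is a minimal sketch. The device and volume group name are arguments; local1 is only the default, taken from the example above, and the function name is hypothetical. Identify the right device first, for example with `fdisk -l`.

```shell
#!/bin/sh
# Sketch: turn a local partition into an LVM volume group for Proxmox VE.
# WARNING: pvcreate writes LVM metadata to the device; double-check it first.
# Usage: create_local_vg <device> [vg-name]
create_local_vg() {
  dev="$1"
  vg="${2:-local1}"
  # Refuse anything that is not a block device (typo, missing disk, ...)
  if [ ! -b "$dev" ]; then
    echo "not a block device: $dev" >&2
    return 1
  fi
  # Refuse a device that is currently mounted
  if awk -v d="$dev" '$1 == d { found = 1 } END { exit !found }' /proc/mounts; then
    echo "$dev is mounted; unmount it first" >&2
    return 1
  fi
  pvcreate "$dev" || return 1
  vgcreate "$vg" "$dev" || return 1
  # Show the new volume group and its free space
  vgs "$vg"
}
```

Running `create_local_vg /dev/sdb1` as root would then be equivalent to the two commands above, with the checks in front.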

As a last step, use the web interface to create your LVM group on top of this new volume group.

Note: I need to test LVM groups a bit more, because it’s not something I’m really familiar with. In a future post, I might provide more information about this storage model specifically.

That’s it for this post! As you’ve seen, PROXMOX VE offers a variety of options for storing virtual machines. It can easily be integrated into a Windows infrastructure (using the Directory storage option) or a Linux/Unix infrastructure (using NFS shares). Nowadays, the most common configuration in virtualization architectures is to use a SAN/NAS infrastructure, which PROXMOX VE can use through iSCSI. PROXMOX VE lets you build a virtualized infrastructure on top of standard, cost-effective technologies.

If you want to know more about PROXMOX VE, stay tuned! We have just started our journey.

Thanks man, that helped a lot.

Which is the best disk type (IDE, SCSI or VIRTIO), and which is the best network card choice, for Win32 guests on an NFS share in FreeNAS?

How do we make ProxMox use the NFS on FreeNAS as a normal user instead of as root?

Hello Ap. Muthu,

Hmm, not sure...

I’ve mainly been “virtualizing” Windows guest machines and, so far, for compatibility reasons, I’ve been using the IDE disk type.

For the network interface, I mainly use the VIRTIO network type, and so far I have had very few issues. Occasionally (really the exception), I have to switch to a more common network interface (the e1000).

I haven’t used FreeNAS for quite some time (busy, busy with work...), so I can’t answer your question about the NFS share right away,

but this could be the starting point of a future post.

Thank you for your comments.

Thank you also for the OpenVZ appliances you maintain on the Proxmox VE web site. 🙂 Great job!

Hello,

Many thanks for sharing this interesting and helpful information about Proxmox.

Also, thank you for the rest of the site: interesting and well done!

I have a few questions about the storage model of Proxmox. Please consider this scenario:

I need to set up an FTP service with Proxmox VE.

I create a Linux machine (Ubuntu, for example) and set up the FTP service (pureftpd).

Now, I want to add a 1 TB hard disk to the virtual machine as the home folder for all the FTP users.

Do I need to create a 1 TB virtual hard disk (the full size of the real disk) and attach it to the FTP server VM?

After that, is it possible for the other VMs to access the same hard disk?

If the hard disk is a NAS that needs to be accessible from the VM but also from other PCs on the LAN, is it possible to share the content of this disk between different PCs and virtual machines?

If it is possible, could you give me a tip on how to set this up?

I hope my question is clear and makes sense.

Thank you

Sergio

Hello Sergio,

I’m currently abroad, so I cannot fully check the scenario you want to implement.

If you want to share content between virtual machines and/or physical machines, I would use iSCSI targets or NFS inside the virtual machine rather than creating a virtual disk to hold the data to be shared.

Depending on your NAS, you should be able to configure iSCSI targets or NFS mount points and share that content with other machines on your network.

If you have Windows 7 on your network, you can still use NFS, because you can install the Services for NFS from Add/Remove Programs.

You might try to connect the “shared virtual disk” to another virtual machine through the configuration file, but I’m pretty sure that you will either not be able to use the data, or not all of the data will be visible on the shared disk.

Again, this is a quick answer with no testing behind it, but the “normal” way would be NFS or an iSCSI target (inside the virtual machine, not the storage used by PROXMOX VE to host the virtual disks).

The easiest option would be to simply share the data from inside your virtual machine so that other machines can use it.

I hope this answers your question.

Best regards

Let me simply say that you rock... Very helpful work. God bless you.

Hello,

Many thanks for sharing about Proxmox.

I have a problem using a NAS with Proxmox.

The NAS is connected to Proxmox, but when I try to create a CT on the NAS storage, I get this error: “Cannot change ownership to uid 0, gid 0: Operation not permitted”

I hope my question is clear and makes sense.

Thank you

Afiq

Hello Afiq,

Can you provide a little bit more technical information?

Which type of NAS are you using (FreeNAS, Openfiler, QNAP, ...)?

Which kind of storage are you using, NFS or iSCSI? (I’m assuming NFS, based on the error message.)

How did you configure the NFS permissions? This sort of error message appears if root has no access to the NFS share. If you are using NFS, you have to ensure that the no_root_squash option is enabled.

Hope this helps

Thank you for your fast response, Griffon 😀

I apologize if the information I provided lacked detail.

I use a Buffalo TeraStation NAS. Yes, you are right: I’m using NFS, and I configured the NAS only through its web config.

I don’t understand how to configure NFS permissions. Is it done from the Proxmox side or from the NAS software (web config or command line)?

As you explained, root should have access to the NFS share. How can I configure this? And how do I enable the no_root_squash option?

I am very new to Proxmox, and I have found very little documentation that I can understand. I hope for your guidance.

Thanks for your support

Hello Afiq,

After reading a bit about the Buffalo product, it seems that the NFS share is created with permissions set to nobody. You will need to change the permissions as follows.

From an SSH or Telnet session, connect to your Buffalo box and edit the /etc/exports file.

You should see something like rw,sync,all_squash,…

Replace these options with the following:

```
rw,sync,no_root_squash,no_subtree_check
```

and then run `exportfs -ra`.
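The manual edit above can also be scripted. This is a minimal sketch, assuming the export options appear in parentheses on each export line and start with rw,sync,all_squash as described; the real content of the Buffalo file may differ, so the script keeps a backup copy. The function name is hypothetical.

```shell
#!/bin/sh
# Sketch: swap all_squash export options for no_root_squash in an exports file.
# Usage: allow_root_on_exports [exports-file]   (default: /etc/exports)
allow_root_on_exports() {
  f="${1:-/etc/exports}"
  # Keep a copy of the original file next to it
  cp "$f" "$f.bak" || return 1
  # Replace "(rw,sync,all_squash...)" with the options given above
  sed 's/(rw,sync,all_squash[^)]*)/(rw,sync,no_root_squash,no_subtree_check)/g' \
    "$f.bak" > "$f"
}
```

After running it on the NAS, `exportfs -ra` re-exports the shares with the new options, as described above.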

Then try to remount the NFS share within your Proxmox VE infrastructure, and you should be good to go.

BUT these settings will be removed after a reboot: this is not a persistent change (on a Buffalo box).

Update: I’ve found this link, which might help you more than the info I provided:

http://brain.demonpenguin.co.uk/2010/03/02/there-be-buffalo-here/

Hope this helps

See ya

Thank you for your answer, Griffon 🙂

Your instructions were very helpful

God Bless You

I don’t usually bother leaving comments, but your website and your tutorials are just too good: very well described, and a JOB well done. Keep up the good work! Your website helped me a lot in setting up Zen Load Balancer for Exchange.

Thank you so much.

Thank you for the visit and the positive comments

I will try to keep it up 🙂

Till next time

See ya

Hi,

I found your article useful in creating LVM groups from my iSCSI targets. My setup is:

1. A QNAP NAS used as the iSCSI host

2. Two CentOS VMs in Proxmox VE

I want to configure Oracle RAC using iSCSI targets as shared storage. So far, I have:

1. created iSCSI targets in Proxmox

2. created LVM groups for each of those targets

However, I am unable to see these VGs and LVs from the CentOS VMs, although they are visible on my host machine. Here is an example:

```
kanwar@abhimanyu:~$ sudo vgscan -v
Wiping cache of LVM-capable devices
Wiping internal VG cache
Reading all physical volumes. This may take a while...
Finding all volume groups
Finding volume group "vg_crsvote"
Found volume group "vg_crsvote" using metadata type lvm2
Finding volume group "vg_vm"
Found volume group "vg_vm" using metadata type lvm2
Finding volume group "vg_data"
Found volume group "vg_data" using metadata type lvm2
kanwar@abhimanyu:~$ sudo lvscan -v
Finding all logical volumes
ACTIVE '/dev/vg_crsvote/crs-vote' [2.00 GiB] inherit
ACTIVE '/dev/vg_vm/vm-100-disk-1' [20.00 GiB] inherit
ACTIVE '/dev/vg_vm/vm-100-disk-2' [20.00 GiB] inherit
ACTIVE '/dev/vg_vm/vm-101-disk-1' [20.00 GiB] inherit
ACTIVE '/dev/vg_vm/vm-101-disk-2' [20.00 GiB] inherit
ACTIVE '/dev/vg_vm/vm-102-disk-1' [20.00 GiB] inherit
ACTIVE '/dev/vg_vm/vm-102-disk-2' [20.00 GiB] inherit
ACTIVE '/dev/vg_data/Data' [500.00 GiB] inherit
```

In Proxmox VE, I have an iSCSI target called i_crsvote and an LVM group lv_crsvote. How can I make it ‘visible’ to the VMs?

Oracle RAC requires that the storage be ‘raw’, not in terms of a raw device but more as ‘leave it to oracle to manage it’.

Finally, here’s what the CentOS vm can see:

```
[root@oralinvm01 ~]# vgscan -v
Wiping cache of LVM-capable devices
Wiping internal VG cache
Reading all physical volumes. This may take a while...
Finding all volume groups
Finding volume group "vg_oralinvm01"
Found volume group "vg_oralinvm01" using metadata type lvm2
[root@oralinvm01 ~]# lvscan -v
Finding all logical volumes
ACTIVE '/dev/vg_oralinvm01/lv_root' [15.57 GiB] inherit
ACTIVE '/dev/vg_oralinvm01/lv_swap' [3.94 GiB] inherit
```

So the VM sees only what was created to install the OS, not the new LVs created on the iSCSI targets.

Hope this is clear!

Thanks.

Hello there,

If I understand correctly, you have configured iSCSI disks and connected them to the Proxmox VE server, and you are now asking how to use these disks inside the virtual machines. Correct?

You cannot (or you should not). The iSCSI target disks you have created and connected to Proxmox VE are used as storage for your virtual disk files.

If you need to configure Oracle RAC, where an iSCSI target disk is needed, you will need to:

- create additional iSCSI targets (to be used by the VMs only)

- configure the iSCSI initiator within the virtual machines

- connect these iSCSI target disks to the VMs directly
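Inside a CentOS guest, the last two steps might look like the sketch below. It assumes that the iscsi-initiator-utils package (which provides iscsiadm) is installed in the VM, that the new target is reachable from the VM’s network, and that the portal address and target IQN are passed as arguments. The function name is hypothetical.

```shell
#!/bin/sh
# Sketch: connect a VM directly to an iSCSI target (run inside the guest).
# Usage: attach_iscsi_target <portal-ip[:port]> <target-iqn>
attach_iscsi_target() {
  portal="$1"; iqn="$2"
  if [ -z "$portal" ] || [ -z "$iqn" ]; then
    echo "usage: attach_iscsi_target <portal> <target-iqn>" >&2
    return 2
  fi
  # Ask the portal which targets it offers (this also records them locally)
  iscsiadm -m discovery -t sendtargets -p "$portal" || return 1
  # Log in to the chosen target; its LUNs should then appear as new disks
  iscsiadm -m node -T "$iqn" -p "$portal" --login || return 1
  # Show the active iSCSI sessions
  iscsiadm -m session
}
```

To have the guest log in again at boot, open-iscsi lets you mark the node as automatic, for example with `iscsiadm -m node -T <target-iqn> -p <portal> --op update -n node.startup -v automatic`.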

Hope this helps

Till Next time

See ya